Today, wars are fought on digital battlegrounds like Facebook and Twitter. The weapons used to wage these wars don’t kill people the way guns do, but they are just as dangerous: fake news has been reported to have influenced the 2016 US presidential election.

Three years before that, the World Economic Forum designated massive digital misinformation (i.e. fake news) as a major technological and geopolitical risk, but I guess the world needed to see it to believe it.

If fake news can cause damage at such a large scale, what about fake videos of real people?

It is possible to make fake videos of real people with the help of AI

AI-based technology allows you to do pretty much anything these days, from writing news stories to making restaurant bookings to creating movie trailers. The possibilities are virtually endless.

Before 2017, faking a real person hadn’t been done yet, but it was technically possible. Google Duplex, the virtual assistant that carries out conversations in a human-like voice, shows how convincing synthetic audio can be. Taking this from audio to video was the work of computer scientist and Google engineer Supasorn Suwajanakorn.

As a grad student, Supasorn Suwajanakorn used AI and 3D modelling to create realistic videos of real people synced to audio. By closely studying existing footage and photos that anyone can find on the internet, Supasorn developed a tool that mimics the way a person talks.

Watch Supasorn show how this tool works:

Reality Defender fights against fake photos and videos

As he says in his speech, Supasorn realized the ethical implications and foresaw how his tool could be used if it fell into the wrong hands.

To fight against the misuse of his tool, Supasorn created Reality Defender in collaboration with the AI Foundation.

The AI Foundation is both a non-profit and a for-profit organization working to move the world forward with AI responsibly.

Reality Defender / aifoundation.com

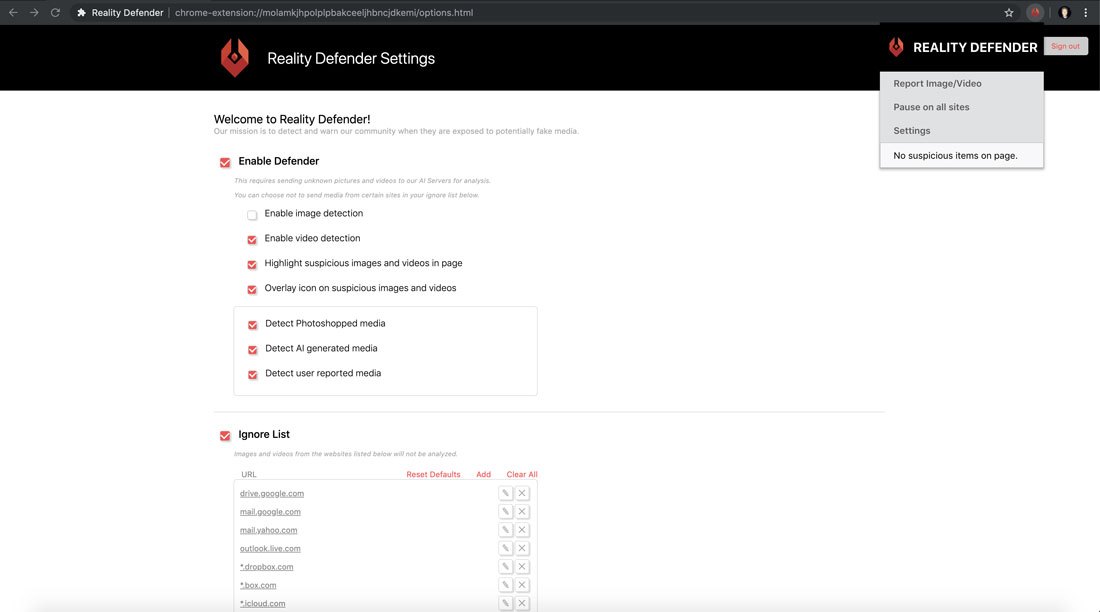

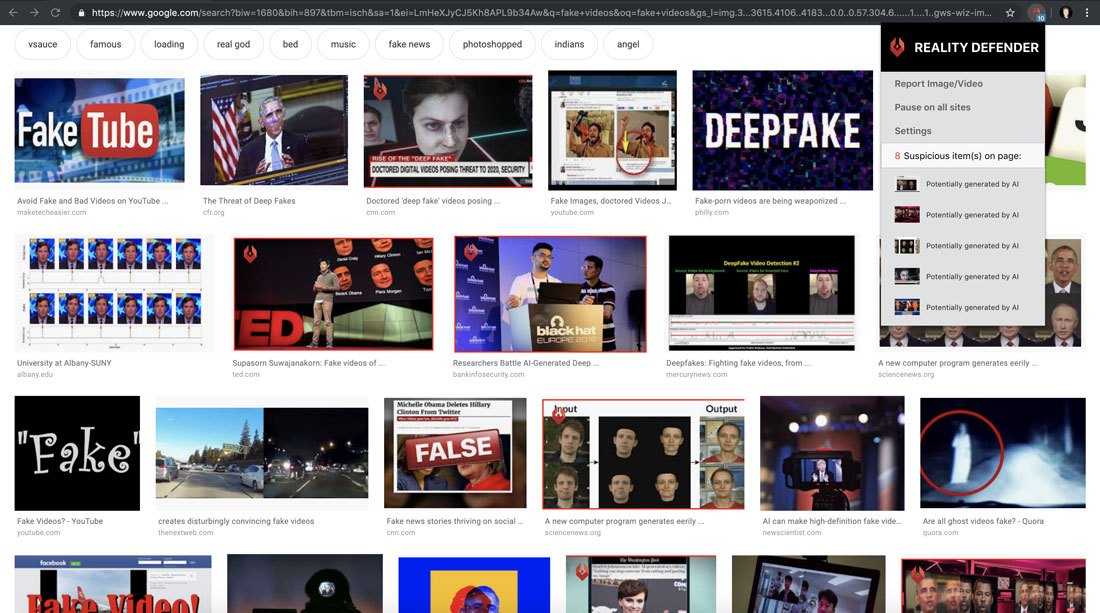

Reality Defender is intelligent software built to detect potentially fake media.

The tool is similar to virus protection, in that it scans every image, video, and other media that a user encounters when browsing the internet.

It reports suspected fakes and runs new media through various AI-driven analysis techniques to detect signs of alteration or artificial generation.

Why is this important?

We more or less expect fashion images and images of public figures online to be altered, but we expect news images to be a snapshot of reality.

Altered news images have significant and dangerous consequences, such as audience manipulation and distortion of the truth.

Remember Wag the Dog, the movie where a Hollywood producer fabricates an entire war in Albania using video and sound effects to distract the media’s attention from a presidential crisis?

Join the Conversation

We’d love to hear what you have to say.

Get in touch with us in our Facebook group and on Twitter.